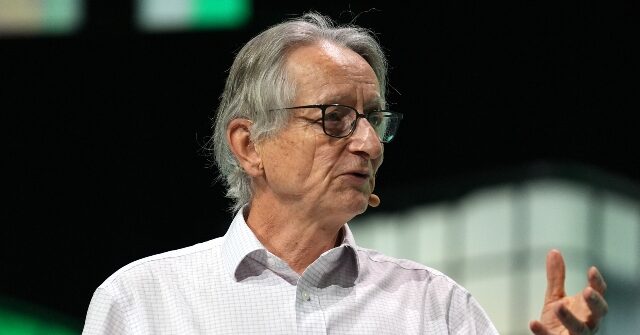

Geoffrey Hinton, widely known as the godfather of artificial intelligence (AI), spoke during Nobel week in Stockholm about the rapid progress of AI technology and the urgent need to prioritize safety. Hinton and John Hopfield are set to receive the Nobel Prize in Physics for their groundbreaking work in machine learning, which has significantly shaped modern AI. Hinton reflected on his pioneering research, which began in the 1980s, and on the evolving implications of his innovations. He said he would undertake the same research again given the chance, but he expressed regret about not addressing safety sooner, a regret sharpened by his belief that superintelligence is approaching faster than he had anticipated.

Hinton anticipates that superintelligence, which would exceed the intellectual capacities of the most intelligent human beings, could emerge within the next five to twenty years. This prediction raises critical concerns regarding humanity’s ability to maintain control over such advanced AI systems. He emphasized the need to confront these existential challenges head-on, stating that society must seriously consider strategies to mitigate the risks posed by uncontrollable AI. This perspective comes amid growing apprehensions amongst researchers and policymakers about the implications of AI systems that operate autonomously and with increasingly sophisticated capabilities.

In light of his concerns over AI safety, Hinton made headlines in 2023 by resigning from Google, a dramatic shift in his engagement with the technology he helped create. Despite feeling conflicted about his contributions to AI, he rationalizes his efforts by noting that, had he not pursued this path, someone else likely would have. This acknowledgment points to a broader issue within the tech community: the potential for AI’s misuse by malicious actors. He underscored the potential of generative AI systems to spread misinformation, displace jobs, and threaten societal stability and security.

Hinton also touched upon the immediate threats posed by lethal autonomous weapons, indicating that without strict self-regulation, governments and tech companies could exacerbate vulnerabilities in global security frameworks. He voiced particular concern about an accelerating arms race involving major powers, such as the United States, China, and Russia, where the development of autonomous weaponry remains unchecked. This environment presents a perfect storm of technological advancement coupled with ethical and safety challenges, highlighting a legislative and regulatory vacuum that could lead to dire consequences.

Despite Hinton’s apprehensions about AI, he and Hopfield went on to present their Nobel Prize-winning research, which included Hinton’s development of the Boltzmann machine. This model learns from examples rather than being explicitly programmed, and it helped lay the groundwork for modern neural networks, which are loosely inspired by the way the brain processes information. These advances have significantly improved applications such as speech recognition, showcasing the transformative potential of machine learning models. Hinton’s contributions have consistently earned acclaim within the academic and scientific community, reflecting his influence as a thought leader in AI.

In addition to the Nobel Prize, Hinton’s accolades include the prestigious 2018 A.M. Turing Award, which he shared with Yoshua Bengio and Yann LeCun. His recent recognition with the VinFuture Prize, a substantial award for scientific achievement, further cements his stature in the field. As the conversation around AI’s future continues to evolve, Hinton’s reflections raise crucial questions about how humanity can harness the promising capabilities of AI while addressing the ethical dilemmas and safety concerns that could shape society in the coming years.