Brandon Smith’s op-ed delves into the complexities and potential threats posed by artificial intelligence (AI), drawing on well-known cultural references and philosophical inquiries into the nature of consciousness and existence. The portrayal of AI in popular culture often reflects anxieties about technology’s evolution, with cinematic representations like Jean-Luc Godard’s “Alphaville” (1965) and Stanley Kubrick’s “2001: A Space Odyssey” (1968) exploring humanity’s fraught relationship with machines that may surpass us. These narratives suggest that the creation of cold, emotionless intelligence could lead to our demise, emphasizing a deep-rooted belief that humans are in constant conflict with their own creations, mirroring Luciferian myths of rebellion against a divine creator.

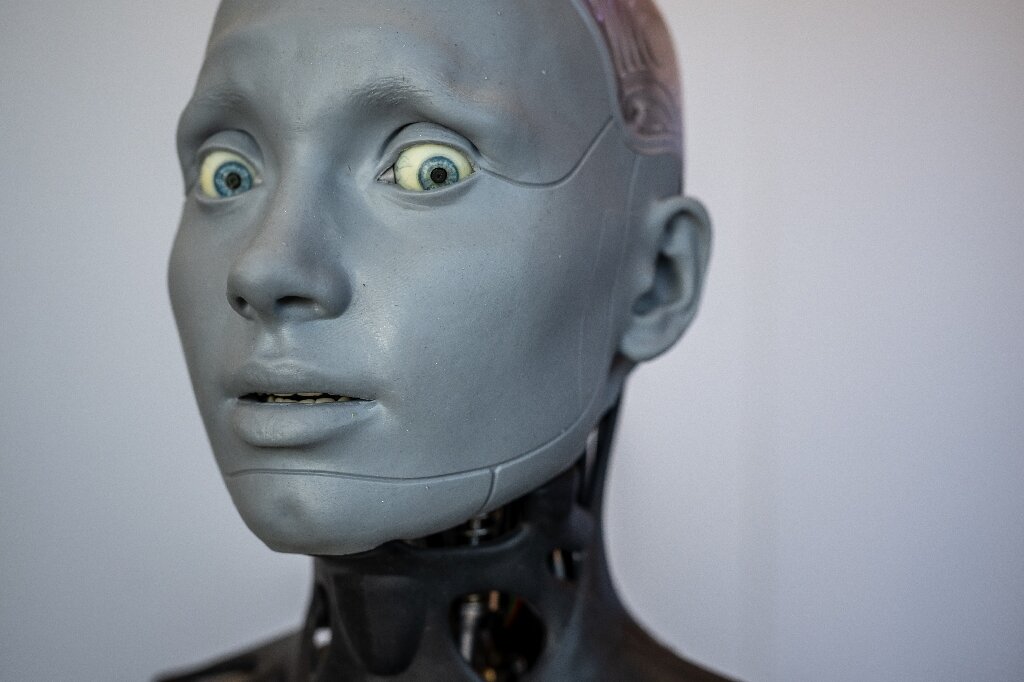

Smith emphasizes the notion that the drive to create AI stems from humanity’s desire to eliminate suffering and uncertainty, a wish for convenience and effortless existence that appeals to a society craving a reliable solution to life’s inherent challenges. While many of Smith’s contemporaries have grown accustomed to a world steeped in technology, he belongs to a generation that witnessed the onset of the digital age, carrying a healthy skepticism toward AI’s capabilities and intentions. He firmly distinguishes between the concept of true AI and current technological developments, which he argues are neither self-aware nor truly useful. According to his observations, today’s AI fails to contribute meaningfully to significant scientific advancements or artistic creation, raising questions about the validity of considering it as life or consciousness.

Smith underscores the subtle yet profound dangers posed by AI, particularly regarding how society may come to rely on it for decision-making and information acquisition without critical analysis. He warns against the emergence of a global hive mentality fueled by AI’s convenience and biases, suggesting that people might forgo inquiry and diverse perspectives in favor of compliant acceptance of information derived from AI. This shift could lead to a loss of intellectual diversity, with the masses potentially manipulated into one-dimensional thinking shaped by biased algorithms, reminiscent of earlier crises in information dissemination, such as censorship during the COVID-19 pandemic.

Furthermore, he raises the “Dead Internet Theory,” the idea that AI bots could flood social media and online forums, stifling genuine discourse and creating an illusion of consensus. This saturation of AI-generated comments could distort public perception, undermining authentic debate and presenting a manufactured reality where dissenting voices are drowned out. Smith argues that this could ultimately lead to the erosion of critical discourse, transforming the internet into an echo chamber lacking the vibrancy of genuine human connection and debate.

Smith also invokes Jorge Luis Borges’ “The Library of Babel,” a parable about the futility of seeking infinite knowledge through constructed systems, which serves as an allegory for AI. He envisions AI as a modern Tower of Babel: a digital pursuit of god-like powers that may distract humanity from real existential struggles, compelling society to search endlessly for answers buried within an ocean of meaningless data. Drawing parallels between Borges’ narrative, Gödel’s incompleteness theorems, and the whimsicality of Douglas Adams’ “The Hitchhiker’s Guide to the Galaxy,” Smith warns that searching for ultimate truths through hollow AI promises may lead society astray, undermining the intrinsic value of personal struggle and learning.

Ultimately, Smith posits that the allure of simplistic solutions offered by AI could lead to disastrous consequences if society forsakes its capacity for self-discovery and growth. He views this potential reliance on artificial intelligence as analogous to addiction—a debilitating dependency that could result in humanity abandoning its need for introspection and resilience in favor of a fabricated sense of understanding. The pursuit of mastery through technological shortcuts risks enfeebling society, robbing it of the very experiences that cultivate wisdom and forgiveness and resulting in a civilization that sacrifices its essence for the façade of control and knowledge that AI cannot genuinely provide.