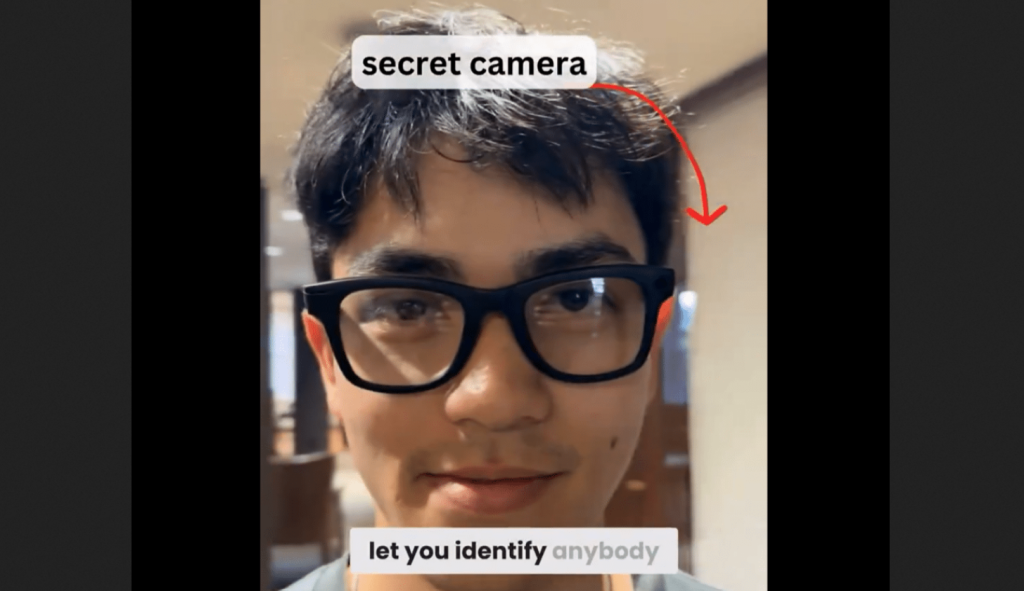

Two Harvard students, AnhPhu Nguyen and Caine Ardayfio, have drawn public attention with a tool that turns Meta’s smart glasses into a device for identifying strangers through facial recognition. The glasses’ built-in camera streams live video to Instagram, and the students’ software, I-XRAY, processes that footage. Using artificial intelligence, the program detects faces and searches the internet for additional images of the same person. It then gathers personal data, from names to home addresses, drawn from online sources such as articles and voter registration databases. The whole process can surface a stranger’s personal information in under two minutes, which raises serious questions about privacy in an increasingly surveillance-oriented society.

Nguyen and Ardayfio say the project is meant to highlight the risks created by widespread access to such technology, but their approach has drawn criticism from PimEyes, the facial recognition service they used. PimEyes director Giorgi Gobronidze argued that the demonstration oversimplifies the complexities of privacy and may inspire malicious actors to copy the method. His concern is that, as these tools become easier to combine, more people may misuse them without considering the ethical implications. Despite these warnings, the students maintain that their goal is to provoke discussion about privacy, not to encourage harmful behavior.

PimEyes has since taken steps to prevent misuse of its service, including banning accounts linked to Nguyen and Ardayfio’s project and tightening its security protocols. The company stated that although the project made use of its technology, it did not breach PimEyes’ terms of service, primarily because the project did not integrate with the service through an API. Gobronidze also noted that a larger-scale application of facial recognition could rely on more advanced tools that have proven significantly more accurate than PimEyes, suggesting that building such a system on PimEyes’ own platform would not be feasible at scale.

The episode raises pressing questions about the ethical use of facial recognition, particularly by law enforcement. Reports that police in New Zealand and the UK have used similar services point to a troubling trend: authorities find these tools useful, yet their use has outpaced regulatory oversight. In New Zealand, for example, audits revealed that police had accessed PimEyes hundreds of times before restrictions were imposed. Such practices reflect a growing reliance on facial recognition by government authorities, a reliance that risks normalizing mass surveillance.

PimEyes also faces scrutiny in other countries, including Australia and Germany, over law enforcement’s use of its technology. After discovering unauthorized access by UK police forces, PimEyes introduced changes aimed at compliance and ethical practice, focusing largely on data minimization. The response illustrates the difficult balance between technological advancement and ethical considerations as tools like facial recognition become commonplace in areas such as policing without robust oversight.

As innovative but unregulated technologies make personal data ever easier to access, individuals are encouraged to reflect on their digital privacy and on the implications of surveillance. Nguyen and Ardayfio’s project is a stark reminder of how fragile personal privacy has become in the digital age. As technology continues to evolve, society needs an ongoing discussion about the measures required to protect individual rights, so that people understand how their data can be accessed and used by others, whether with benevolent or malicious intent.