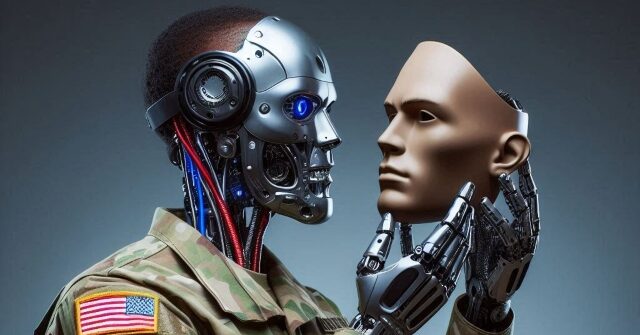

The U.S. military is seeking sophisticated artificial intelligence (AI) capable of creating highly realistic fake online personas, according to a recent procurement document reviewed by The Intercept. The initiative is part of an outreach effort by United States Special Operations Command (SOCOM) to enlist private-sector expertise in producing convincing deepfake internet users. The 76-page document, issued by the Department of Defense’s Joint Special Operations Command (JSOC), lists advanced technologies the military wants for its elite operations and shows a marked interest in tools that can simulate human behavior and appearance online.

JSOC’s specifications call for online personas that appear recognizably human but exist only virtually. Each fabricated identity is expected to include a range of expressions, high-quality identification photos, background imagery, and both video and audio. The goal is to build virtual environments that social media algorithms cannot detect, including selfie-style videos that show the fake persona in dynamic settings with matching backdrops and interactions.

This kind of social media manipulation is not unprecedented: the Pentagon has previously deployed fake social media users to push specific narratives and agendas. Meta and Twitter have recently shut down propaganda networks affiliated with U.S. Central Command that relied on artificially created accounts with forged identity images. The military’s interest in deepfake technology reflects its ongoing efforts to use audiovisual deception for purposes that include influence operations and disinformation campaigns. Such capabilities depend on AI and machine learning methods that analyze large databases of human features to produce believable digital representations.

While the U.S. military explores these tools, national security experts and intelligence officials warn against similar practices by rival nations, characterizing foreign adversaries’ use of deepfakes as a critical risk and urging vigilance. The NSA, FBI, and CISA have jointly identified synthetic media and deepfakes as significant challenges, calling their proliferation a “top risk” for 2023 and beyond. This contrast raises ethical questions about the U.S.’s dual stance: condemning deepfakes produced by others while developing its own capabilities in this nascent domain.

Critics of the initiative express serious concern about its implications. Some experts argue that deepfake technology has no legitimate applications beyond manipulation and deception. Heidy Khlaaf, chief AI scientist at the AI Now Institute, warns that state-sponsored deepfake programs will likely encourage other militaries and adversaries to follow suit. As the ability to generate believable fake identities improves, the growing difficulty of telling truth from fabrication online could have significant consequences for society.

Ultimately, the military’s interest in using AI to create deceptive online personas illustrates the complicated relationship between technology, statecraft, and societal integrity. As military and intelligence agencies press ahead with AI-driven deepfake capabilities, the implications for information authenticity, public trust, and the balance of power in digital communications demand careful consideration. Debate over the ethical use of such technology will likely continue as national security strategy and the technology itself evolve together, leaving a precarious balance between innovation and accountability.