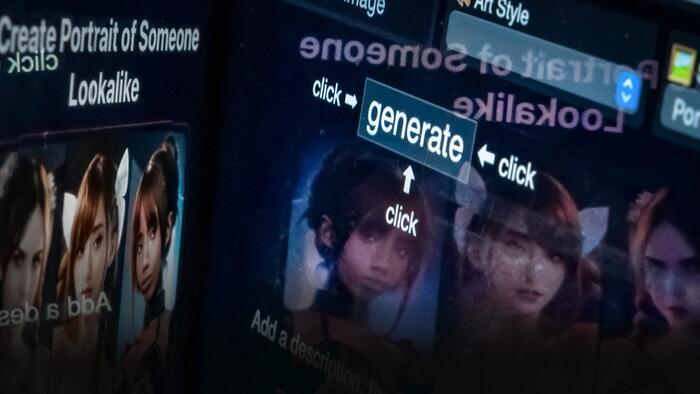

In recent years, the challenges facing schools have changed sharply. Where educators once dealt with relatively benign mischief, such as students doodling unflattering sketches of teachers or making joke images, they now confront serious threats posed by artificial intelligence (AI). Schools have begun developing emergency response plans because sexually explicit AI-generated images of students or teachers could surface on social media. This shift reflects a broader concern about the misuse of deepfakes: AI-generated or AI-altered images, video, and audio that realistically depict real people, often without their consent, and that can be used to produce sexual imagery or spread misinformation. As deepfake incidents multiply, including cases in which school principals were depicted using racist and violent language in AI-generated videos, school leaders and policymakers are grappling with what these technologies mean for safety and reputation in educational settings.

The harms caused by deepfakes have prompted a surge of legislative action across the United States. In September 2024, for instance, California Governor Gavin Newsom signed a bill criminalizing AI-generated child pornography, making it a felony to possess or distribute such content. Similar laws have been enacted in other states, including New York, Illinois, and Washington, reflecting growing national recognition of the threat posed by deepfakes. At the federal level, Senator Ted Cruz has introduced the Take It Down Act, which would prohibit the intentional disclosure of non-consensual intimate visual depictions. Together, these measures underscore the urgency of addressing deepfake harms, particularly for minors in school settings.

Despite these legislative efforts, schools still struggle to respond effectively when deepfake incidents occur. Authorities have reported cases in which school staff were impersonated, causing significant disruption. At Maryland's Pikesville High School, for example, a fake audio recording of the principal prompted a police investigation that ended in the arrest of the school's athletic director, who had sought to retaliate against the principal. As AI technology continues to develop, experts stress the need for robust incident response procedures and preventive measures to protect students and staff. Watermarking AI-generated content is one possible approach, but comprehensive technical solutions still lag behind the rapid advancement of AI tools.

In other cases, students themselves have used AI to create misleading or harmful content. In one alarming incident, students at a high school in Carmel, New York, produced deepfake videos impersonating a middle school principal and posted them on social media. School officials identified the students through their social media accounts and disciplined them under the school's code of conduct, though no criminal charges were filed. Parents voiced deep concern about the real fear and anxiety the videos caused among students, especially those in groups who may have felt targeted by the fabricated portrayals.

As schools respond to AI-generated deepfakes, the emotional toll on students must not be overlooked. A Carmel parent whose child was affected by the incident described her fears about the psychological impact on seventh graders, who should not have to carry such adult worries. The anxiety these events trigger is compounded by increasingly frequent lockdowns and campus safety drills, which together create a climate of fear that detracts from learning. Parents have called for greater transparency and communication about safety incidents within the school system, arguing that openness can help ease the anxiety stemming from AI-related disruptions.

Schools urgently need clearer policies and more effective measures for addressing deepfakes. While schools and legislators work to reduce future risks, everyone involved shares responsibility for teaching students digital literacy and the ethical use of technology. As AI continues to evolve rapidly, fostering responsible use and critical thinking among students will be essential. Building resilience against deepfakes and misinformation will require close collaboration among educators, parents, lawmakers, and technology experts to create safer and more supportive schools.