On Thursday, O’Keefe Media Group (OMG) released additional footage of Meta engineers discussing the company’s content censorship practices. The release follows an earlier one that highlighted the automatic demotion of anti-Kamala Harris posts on Facebook and Instagram. In an undercover interview, Meta Senior Software Engineer Jeevan Gyawali said that users who post negative content about Harris are not notified when it is suppressed; at most, they might notice a drop in their engagement metrics. According to the footage, this suppression is handled by Meta’s “Integrity Team,” which uses a system of “civic classifiers” to carry out what is commonly called “shadowbanning”: posts are suppressed without any direct notice to the users affected.

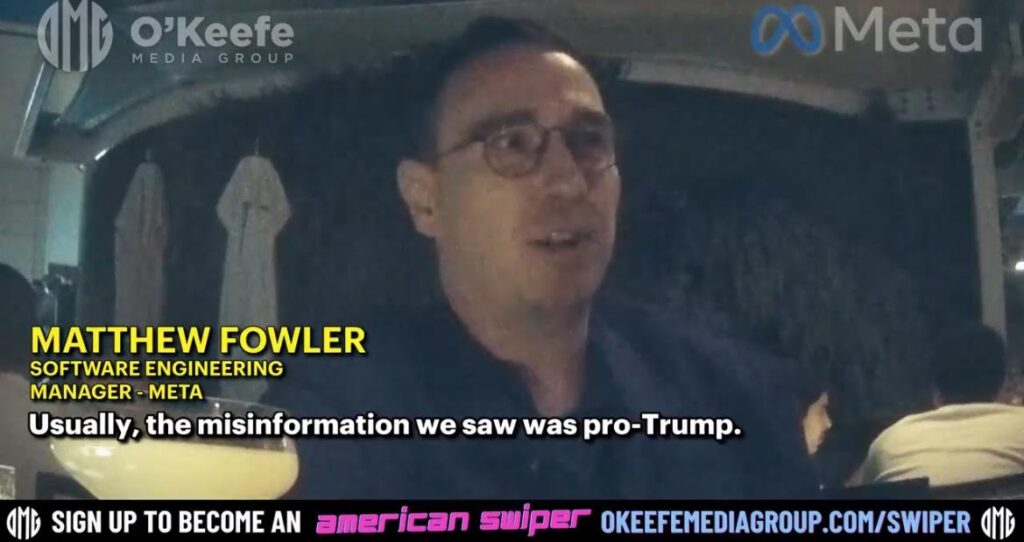

Further conversations indicated that the same processes are applied to pro-Trump posts, which a dedicated team systematically reviews and removes. Matthew Fowler, a Software Engineering Manager at Meta, confirmed this in his conversation with the OMG journalist, explaining that the platform often relies on mainstream media outlets for verification, which raises questions about the objectivity of its moderation. Fowler stressed that decisions about what counts as disinformation are heavily shaped by the news landscape, implying that Meta’s actions may follow prevailing media narratives rather than an impartial assessment of the content itself.

Plamen Dzhelepov, a Machine Learning Engineer at Meta, also spoke about political bias within the company, noting that the firm reserves the right to suppress any content, a remark that suggests political favoritism is built into its practices. Dzhelepov acknowledged that targeting certain political views, particularly those of “right-wing conspiracy theorists,” is part of Meta’s operational mandate. This reinforces the concern that users with conservative viewpoints are disproportionately affected by these moderation mechanisms, raising ethical questions about the impartiality of the platform’s content moderation.

Echoing this sentiment, Michael Zoorob, a Data Scientist at Meta, described the fact-checking process, stating that a large share of the claims fact-checkers rate as false originate from conservative sources. This admission points to a systemic bias in Meta’s content moderation strategy, one that suppresses conservative voices under the guise of maintaining factual integrity. Zoorob’s remarks highlight the concern that Meta’s filtering systems may disproportionately screen out conservative narratives, narrowing the range of opinions that can be heard online.

Describing how misinformation is handled, Dzhelepov outlined a two-pronged approach: content is either suppressed outright or labeled with a community note flagging its questionable authenticity. He also said that certain voices are actively suppressed, showing that Meta employees are well aware of how these policies affect public discourse. Zoorob recounted specific cases, such as the downranking of the Hunter Biden laptop story following an FBI advisory, which underscores how much influence outside agencies can have on Meta’s moderation decisions and raises further doubts about the integrity of information shared on the platform.

In summary, the footage released by O’Keefe Media Group suggests that Meta’s internal practices reflect a systematic bias against conservative viewpoints. The Meta engineers’ statements not only highlight the company’s reliance on media narratives for content moderation but also raise ethical dilemmas about the suppression of free speech. As the moderation of political content remains a focal point in debates over social media governance, these accounts raise deeper questions about the role technology companies play in shaping public discourse and what that means for democratic engagement in the digital age.